I write about Quant UX, R, and marketing research, and I review various conference, journal, and book proposals. Lately there has been a surge in papers about LLM applications, such as using LLMs as a source of potential data for product insights.

A common pattern I've seen in research papers about LLM applications is the following:

- First, the authors posit an LLM to [answer questions about X] ... or [solve problem Y] ... or [give quasi-human data about Z].

Here are two examples (I'm making these up, but they're similar to things I've seen several times):

"Marketers often run consumer segmentation studies. What if we used AI to take those surveys instead? How well do AI segments match live respondents?"

"We created an LLM to answer questions about grooming pets. We tested it with 50 prompts about grooming. How good are its answers?"

- Second, the authors compare the LLM results against another method, such as a different data collection protocol (e.g., a consumer survey) or a different rating source (e.g., answers from experts).

In this post, I explain why such work usually does not constitute "research" and why it makes for uninteresting papers and conference presentations.

Note: this commentary is not about any proposals to Quant UX Con 2024. It's about papers generally. OTOH, perhaps something here will be useful for folks working on proposals for the Quant UX Con CFP.

To be clear, I'm not arguing that LLM applications are bad or useless. Instead, I'm arguing that industry papers claiming to do research about LLM use cases are often extremely misguided in their conceptions of research and statistics. This article is a call-to-action to make them better.

If you'd like an analogy, think about a new Tic-Tac-Toe application. One might have good reasons to create the app and it could be useful (perhaps it runs on a new device for children). However, a paper that compares it to previous Tic-Tac-Toe models is almost certain to be uninteresting as research. Just because something is useful doesn't mean that data and stats about it are interesting.

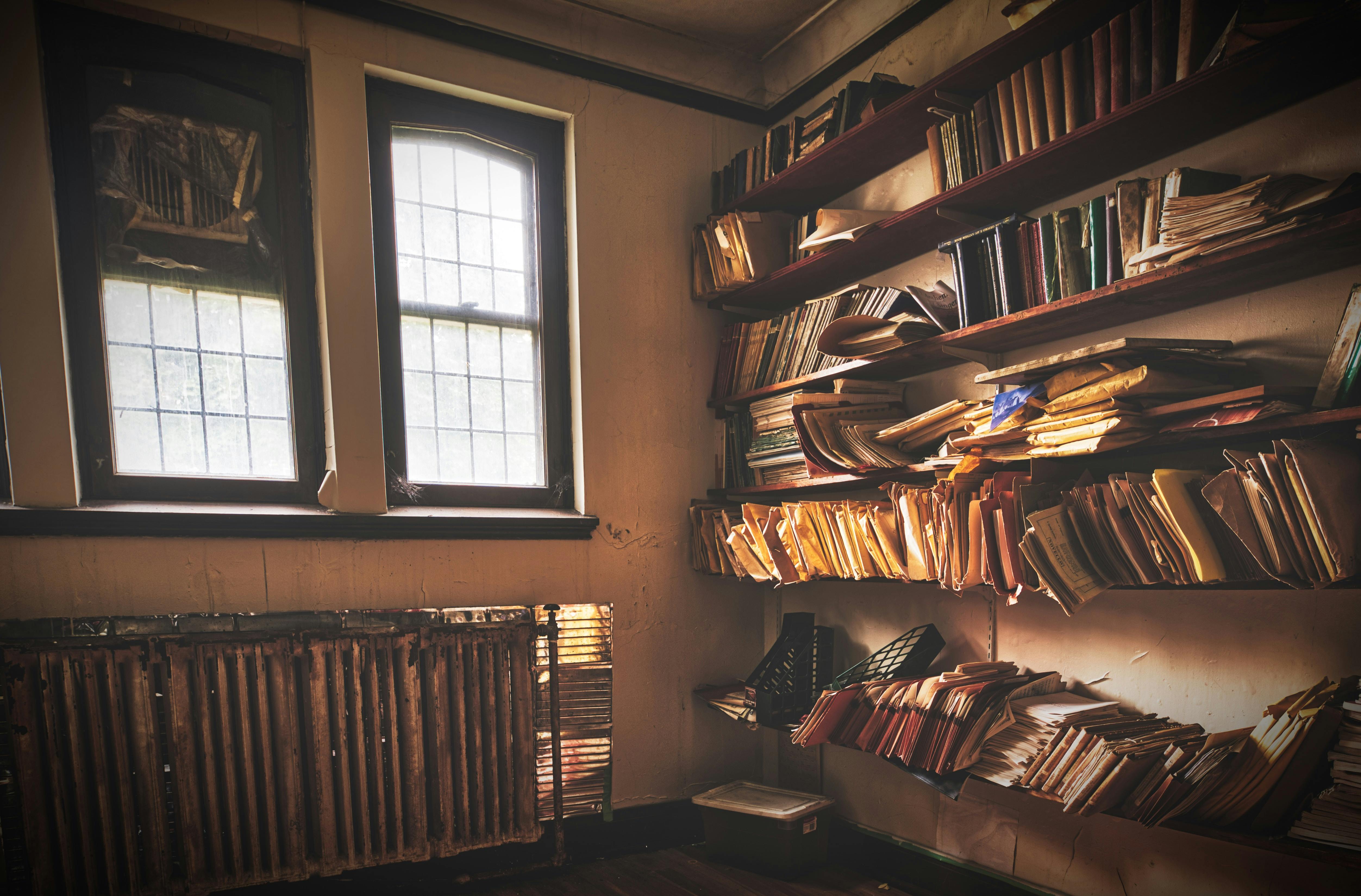

The File Drawer Problem

When reading a paper about the value of an LLM application (or any treatment, for that matter), the first three questions we should ask ourselves are:

Do the authors have a vested interest in the result? For instance, are they the creators of the application?

Were the questions biased (perhaps unknowingly) in a way that guarantees the result?

Is it possible that only positive results are being published, and that negative findings are buried?

The last of these is the file drawer effect: published findings tend to be heavily skewed towards positive outcomes because negative results go unpublished. The skew is compounded when the authors have an incentive to publish, and compounded again when the authors created the assessment questions or methods themselves.
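To make the file drawer effect concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the function name `simulate_file_drawer`, the study sizes, and the crude "publish only if the result looks clearly positive" rule are hypothetical stand-ins, not a model of any real literature. The true effect is set to zero, so any positive average among the "published" studies comes from selection alone.

```python
import random
import statistics

def simulate_file_drawer(n_studies=2000, n_per_study=20, true_effect=0.0, seed=1):
    """Simulate many small studies of a treatment with a known true effect.

    A study is 'published' only if its mean is positive and more than about
    two standard errors from zero (a rough stand-in for a significance filter).
    """
    rng = random.Random(seed)
    all_means, published = [], []
    for _ in range(n_studies):
        obs = [rng.gauss(true_effect, 1.0) for _ in range(n_per_study)]
        mean = statistics.fmean(obs)
        std_err = statistics.stdev(obs) / n_per_study ** 0.5
        all_means.append(mean)
        if mean / std_err > 2.0:  # hypothetical publication rule
            published.append(mean)
    return all_means, published

all_means, published = simulate_file_drawer()
print(f"mean effect, all studies:       {statistics.fmean(all_means):+.3f}")
print(f"mean effect, published studies: {statistics.fmean(published):+.3f}")
print(f"share of studies published:     {len(published) / len(all_means):.1%}")
```

Under these assumptions, the average over all studies sits near zero while the average over the published subset is clearly positive, even though nothing real is being measured.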

There's not much else to say here. We should not simply accept one-off claims that an LLM (or anything else) gives a great answer to a complex problem.

The Unstable Unit Problem

When we assess an outcome with and without some intervention (such as using an LLM), it is a variation of a clinical trial that proposes a treatment effect. Statistical analysis of such trials has a core assumption known as the Stable Unit Treatment Value Assumption (SUTVA).

In a nutshell, SUTVA says that the response of each unit (such as each person in a UX study, or each LLM prompt in a study of AI systems) depends only on its own circumstances and does not depend on the responses of other units. SUTVA is one of two necessary foundations of statistics that compare outcomes. It underlies everything from simple comparisons (such as differences in means assessed with t-tests) up to complex analyses such as causal modeling.

Unless units are stable and independent, it makes no sense to aggregate them in a statistical model. (BTW, the second necessary foundation is sampling. That may be random sampling or some other kind of sampling, such as matched sampling, that is characterized by a specific model. "Random" is a specific model.)

LLMs violate the Stable Unit Treatment Value Assumption in multiple ways:

LLMs do not have stable definitions. On short time scales, they are unstable due to their stochastic (random) nature and dependence on exact prompts. On medium time scales, they are unstable due to history and spillover effects. On longer time scales, they are unstable due to algorithm updates, UI changes, and training.

LLM answers may depend on other units. Depending on the exact LLM model, prompting regime, and training, an LLM's answers may depend on the output of other systems and on the prior history and sequence of prompts. (Consider IQ testing, which I've discussed separately. Humans are generally unaware of published answers, whereas LLMs are likely to be trained on such literature. A given LLM model may also update itself from prompts or other users' inputs.)

There is no good way to specify a sampling "unit" for an LLM. What exactly is being sampled when a prompt is given to ChatGPT or Bard? How does a particular prompt relate to the entity being sampled?

All of this leads to a core question: when we describe an LLM using statistics, what are we describing? The LLM will be different tomorrow, next week, next month, and next year. Traditional research methods assume that the "thing" being studied is definable and stable ... enough so that it is reasonably close to the SUTVA standard.

When comparing "treatments" such as different systems or prompts, LLMs are not close to the SUTVA standard. Therefore, traditional statistical analyses do not apply to naive sampling of LLMs. And yet too many papers and presentations use those methods. They simply assume that comparisons of outcomes — using t-tests, descriptive statistics, or regression models — will work for LLM assessments just as they do in clinical trials.
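One way to see why this matters is a small simulation, sketched here under stated assumptions. The "session shift" below is a hypothetical stand-in for any shared influence (conversation history, a model update, a prompting regime) that moves a batch of responses together. The functions `simulate_scores` and `false_positive_rate` are illustrative names, and the scores are simulated, not taken from any LLM. Both conditions are drawn from the same process, so every "significant" difference is a false positive.

```python
import random

def mean_and_var(xs):
    """Return the sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def welch_t(a, b):
    """Welch's t statistic, which treats every score as an independent unit."""
    ma, va = mean_and_var(a)
    mb, vb = mean_and_var(b)
    return (ma - mb) / (va / len(a) + vb / len(b)) ** 0.5

def simulate_scores(rng, n_sessions, n_per_session, session_sd):
    """Scores for one condition; each session shifts all of its responses together."""
    scores = []
    for _ in range(n_sessions):
        shift = rng.gauss(0.0, session_sd)  # hypothetical shared drift
        scores.extend(shift + rng.gauss(0.0, 1.0) for _ in range(n_per_session))
    return scores

def false_positive_rate(session_sd, n_trials=2000, seed=7):
    """Share of trials where a naive t-test reports a difference that is not there."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        a = simulate_scores(rng, 5, 20, session_sd)
        b = simulate_scores(rng, 5, 20, session_sd)
        if abs(welch_t(a, b)) > 1.97:  # approx. two-sided 5% cutoff for ~200 df
            hits += 1
    return hits / n_trials

independent = false_positive_rate(session_sd=0.0)
clustered = false_positive_rate(session_sd=1.0)
print(f"false positives, independent responses:       {independent:.1%}")
print(f"false positives, session-dependent responses: {clustered:.1%}")
```

With independent responses the error rate stays near the nominal 5%. Once responses within a session move together, the same test flags a difference far more often, because it counts 100 responses as 100 independent units when the effective number is much closer to the 5 sessions.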

The Validity Problem

I've discussed previously that research must demonstrate both convergent and discriminant validity. This is missing from too many papers about LLM applications.

Convergent validity means that some assessment (such as scoring the answers of LLM prompts) should be demonstrated to agree with other methods that assess the same thing. Discriminant validity means that an assessment method should be shown to differ from other methods that ought to differ.
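As a rough illustration of what such a check could look like, the sketch below uses simulated data only. The variable names (`new_score`, `expert_rating`, `word_count`) are hypothetical: the first two are assumed to measure the same underlying answer quality, and the third is assumed to measure something unrelated. A real study would substitute its own measures and a more careful analysis.

```python
import random

def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

rng = random.Random(42)
n = 200
quality = [rng.gauss(0, 1) for _ in range(n)]    # latent answer quality (simulated)
verbosity = [rng.gauss(0, 1) for _ in range(n)]  # unrelated latent trait (simulated)

new_score = [q + rng.gauss(0, 0.5) for q in quality]      # assessment under study
expert_rating = [q + rng.gauss(0, 0.5) for q in quality]  # should agree with it
word_count = [v + rng.gauss(0, 0.5) for v in verbosity]   # should not agree with it

convergent = pearson(new_score, expert_rating)
discriminant = pearson(new_score, word_count)
print(f"convergent   r(new_score, expert_rating) = {convergent:.2f}")
print(f"discriminant r(new_score, word_count)    = {discriminant:.2f}")
```

The pattern to look for is a high correlation in the first line and a correlation near zero in the second. If the new score tracked word count as closely as it tracked expert ratings, that would suggest it measures verbosity, not quality.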

So far, I have seen very few papers about LLM applications that give attention to either convergent or discriminant validity. Typically, what I see is something like this:

- A list of prompts and responses

- Single-rater assessment of the responses (e.g., by the authors)

... OR, in the case of comparing data sets ...

- Statistics about data set A (such as a consumer survey)

- Statistics about data set B (such as data created by the LLM)

- Comparison of the two data sets with naive statistics (such as a t-test) and without attention to SUTVA as noted above

In both cases, what is missing is systematic attention to the validity of the assessments. Does the LLM perform better than other similar models? Does its answer agree with things it should agree with, and does it differ from those that should differ?

Other forms of validity are also neglected too often, such as ecological validity and even face validity. So are questions of reliability: would one obtain the same results again, or with different prompts? (That is similar to the SUTVA discussion above.) I'll leave those discussions for another time.
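On the narrower point of single-rater assessment, one modest improvement is to have a second rater score the same responses and report chance-corrected agreement. The sketch below computes Cohen's kappa by hand. The ratings are made-up placeholders, and `cohens_kappa` is an illustrative helper, not a function from any particular library.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items.

    Undefined (division by zero) if chance agreement is exactly 1, i.e. both
    raters used a single identical label for every item.
    """
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    labels = count_a.keys() | count_b.keys()
    expected = sum((count_a[k] / n) * (count_b[k] / n) for k in labels)
    return (observed - expected) / (1 - expected)

# Hypothetical ratings of ten LLM responses by two raters
rater_a = ["good", "good", "bad", "good", "bad", "bad", "good", "good", "bad", "good"]
rater_b = ["good", "bad", "bad", "good", "bad", "good", "good", "good", "bad", "good"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.2f}")
```

In this toy example the raters agree on 8 of 10 items, yet kappa is only about 0.58 once chance agreement is removed. That gap is the reason raw agreement, or a single rater's judgment, is weak evidence on its own.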

Why These Papers are Uninteresting

It may be obvious from the above points, but I find such papers to be uninteresting when they do not answer these questions:

Would we expect a different team of researchers to obtain the same results, using a different sample and different assessment methods?

Would we get the same results a month from now?

Do the results generalize in any systematic way to other problems?

Were the hypotheses pre-registered, so that there is no (or at least less of a) file drawer issue?

Are the results theoretically justified in an interesting way? Or are they merely descriptive ("look what the LLM does")?

Without good answers to those questions, an LLM application may be useful but a description of results from it is not research. Too often, it is instead a statement that amounts to, "We did a thing. It's great, see!"

That's not a problem in the world — which is full of such claims — but in my opinion, it's not enough to be interesting for a research journal or conference.

What to Do Instead

A prescription depends on the exact circumstances but as a general matter, I urge authors to consider the following:

Is there a good theoretical reason to expect that an LLM's answer will be good? Or are you on a fishing expedition to "see what happens"? Observations of interesting effects can be useful to share, but post-hoc statistical tests do not magically convert them into "research".

Do you have hypotheses that were at least informally pre-registered? The underlying problem of backwards hypothesis generation is not unique to LLM research, yet it should always be avoided.

What are the plausible alternative "null" models? An effect size of "better than zero" is not a reasonable comparison, although it is (too often) the default for statistical models such as t-tests and ANOVA. Can you posit alternative, simpler LLM applications that might be plausibly similar to yours? Show that your results are better than those alternatives (this is conceptually similar, in a hand-waving way, to null model comparisons used in structural equation modeling and similar methods).

Can you change the locus of the "treatment effect" to show that it is helpful or interesting generally? For example, instead of assessing the outcome yourself, can you show — in an experimental trial — that the results are helpful to some other sample of people?

Can you show that it is not a point-in-time result? Could the result depend on some particular — and probably black box — algorithm and training set? Do you learn something interesting about LLMs or AI applications in general?
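To illustrate the point above about plausible "null" models, the following sketch compares a proposed system against a simpler baseline on the same prompts instead of against zero. It is only a sketch under loud assumptions: the scores are simulated, `paired_bootstrap_ci` is a hypothetical helper, and a percentile bootstrap over prompts is just one reasonable choice. It addresses the choice of comparison only and does nothing to resolve the stability concerns discussed earlier.

```python
import random

def paired_bootstrap_ci(proposed, baseline, n_boot=5000, alpha=0.05, seed=3):
    """Percentile bootstrap interval for the mean per-prompt score difference."""
    rng = random.Random(seed)
    diffs = [p - b for p, b in zip(proposed, baseline)]
    n = len(diffs)
    boot_means = sorted(sum(rng.choices(diffs, k=n)) / n for _ in range(n_boot))
    lo = boot_means[int(n_boot * alpha / 2)]
    hi = boot_means[int(n_boot * (1 - alpha / 2)) - 1]
    return sum(diffs) / n, lo, hi

# Simulated scores on a roughly 1-5 scale for 60 prompts (placeholders, not real data)
rng = random.Random(11)
difficulty = [rng.gauss(3.0, 0.8) for _ in range(60)]          # shared per-prompt level
baseline = [d + rng.gauss(0.0, 0.5) for d in difficulty]        # simpler alternative
proposed = [d + 0.1 + rng.gauss(0.0, 0.5) for d in difficulty]  # system being promoted

mean_diff, lo, hi = paired_bootstrap_ci(proposed, baseline)
print(f"mean score of proposed system: {sum(proposed) / len(proposed):.2f}")
print(f"mean difference vs. baseline:  {mean_diff:+.2f} (95% interval {lo:+.2f} to {hi:+.2f})")
```

The proposed system's average score is far above zero, which a test against zero would report as a strong result. The more informative quantity is the second line: how much better it is than a plausible simpler alternative, and how uncertain that difference is.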

Finally

I hope this inspires you to think critically when reading LLM research ... or conducting it. If so, I'll put in a plug for the upcoming Quant UX Conference in June 2024. Find out more here: https://quantuxcon.org

Thanks for reading!